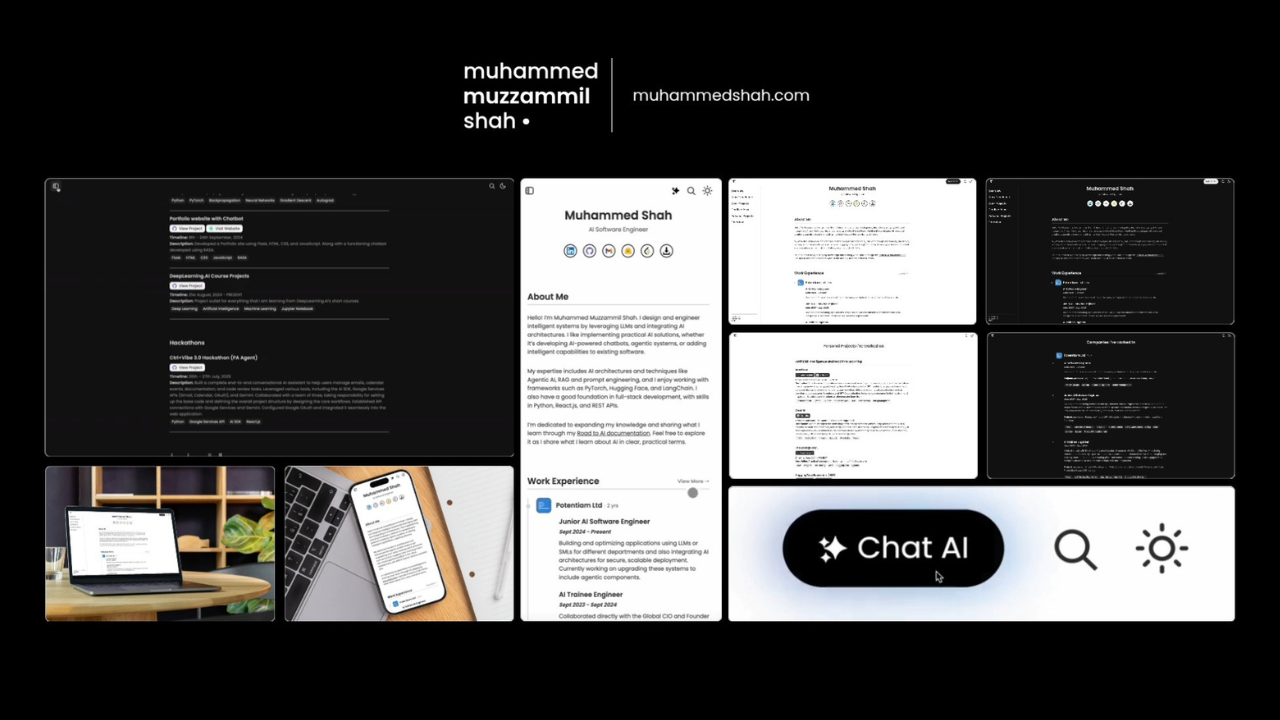

Muhammed Shah

AI Software Engineer

About Me

My Space

Work Experience

Potentiam Ltd

AI Software Engineer

Sept 2025 - Present

Leading a focused AI team to design and deploy scalable AI solutions across multiple projects, while also creating and delivering training programs that help the company build AI skills and drive adoption.

Junior AI Software Engineer

Sept 2024 - Sept 2025

Built and optimized applications using LLMs or SLMs for different departments, and integrated AI architectures for secure, scalable deployment.

AI Trainee Engineer

Sept 2023 - Sept 2024

Collaborated directly with the Global CIO and Founder on AI-driven initiatives, focusing on open-source AI R&D, pilot implementations, and cybersecurity operations.

Wipro Ltd

AI Intern

Mar 2023 - Jun 2023

Developed a pilot for interactive data insight conversations and researched AI observability, ML monitoring, root cause analysis, anomaly detection, and capacity forecasting.

Work Projects

Private Enterprise GPT

Designed, configured, and deployed a secure in-house Private GPT system on Ubuntu with an Nginx reverse proxy, SSL, and custom domain mapping. Integrated Microsoft OAuth authentication to restrict access to company users, automated backend services for resilience, and enabled GPU-powered remote access via VNC. Migrated all AI interactions in-house, ensuring full data privacy and operational reliability.

AI Search

Developed an AI-powered search widget with an “Ask AI” feature for instant, accurate querying of company salary and HR data, leveraging a RAG system with FAISS-indexed embeddings, LangChain orchestration, and Llama 3.x models. Optimized embedding accuracy, reduced hallucinations, and introduced caching for speed, deploying the solution as a JavaScript widget via IIS for seamless internal integration.

Intelligence Framework

Engineered an all-in-one intelligence framework for HR and Talent Acquisition, featuring an LLM-driven resume reviewer, job spec generator, chatbot, and resume formatter with advanced UI and batch processing. Utilized local LLMs via Ollama, LangChain pipelines, and MongoDB for fast, secure, and accurate candidate evaluation, automation, and internal data generation.

Chatbot Development

Led the creation of an advanced company chatbot widget, powered by a proprietary SLM trained on internal data and later enhanced with LLM (Ollama) fallbacks. Delivered features like file downloads, speech-to-text, custom UI, and robust conversation management. Migrated from Rasa Open Source to Rasa Pro CALM for advanced context switching and ensured scalable, low-latency responses for dynamic business needs.

Skills

Certifications

Agentic AI

Issued by DeepLearning.AI - Nov 2025

MCP: Build Rich-Context AI Apps

Issued by DeepLearning.AI - Sept 2025

Open Source Models with Hugging Face

Issued by DeepLearning.AI - Aug 2024

Prompt Engineering for Developers

Issued by DeepLearning.AI - Aug 2024

Build LLM Apps with LangChain.js

Issued by DeepLearning.AI - Jul 2024

Developing AI Applications with Python and Flask

Issued by IBM - Mar 2024

Generative AI with Large Language Models

Issued by Coursera - Feb 2024

Career Essentials in Generative AI

Issued by Microsoft and LinkedIn - Jul 2023

Personal Projects

Chat AI

Built and deployed a React-based AI assistant on my personal website using the AI SDK, enabling multi-step reasoning, tool use, and real-time responses with seamless UI/UX and dynamic knowledge updates.

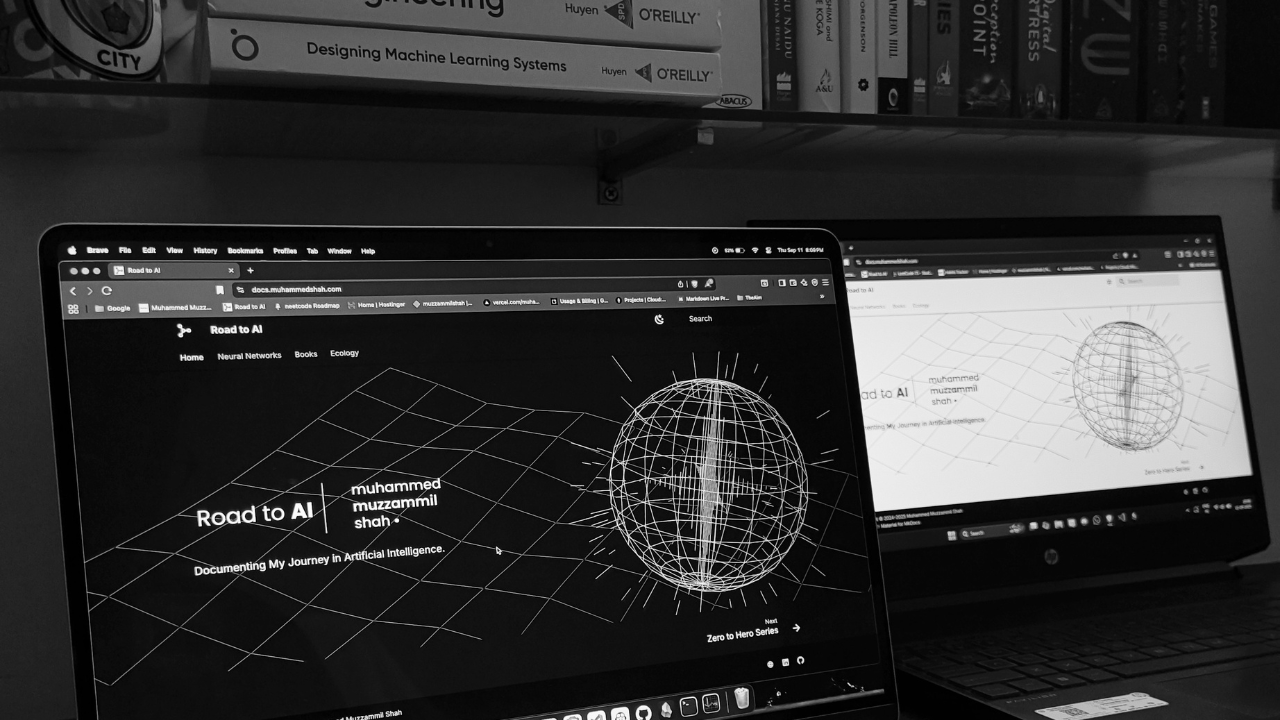

Road to AI

Developed a comprehensive documentation site covering practical neural network and GPT model implementations, foundational ML concepts, and advanced topics like transformers and tokenization, using Python and PyTorch.

Transformer Model: GPT

Created a GPT-style transformer in PyTorch from scratch, progressing from a simple bigram model to a full transformer with multi-head attention, feedforward layers, and text generation capabilities.
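
To illustrate the attention step at the core of such a model, here is a minimal sketch of a single causal self-attention head. It is not the project's code: it uses plain Python lists instead of PyTorch tensors, and the function names (`softmax`, `causal_attention`) and the toy inputs are assumptions made for illustration. In a real transformer the queries, keys, and values come from learned linear projections, and multi-head attention runs several such heads in parallel and concatenates their outputs.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention(queries, keys, values):
    """Single-head scaled dot-product attention with a causal mask.

    Position t only attends to positions 0..t, which is what lets a
    GPT-style model be trained to predict the next token.
    """
    d = len(keys[0])  # key/query dimension used for scaling
    outputs = []
    for t, q in enumerate(queries):
        # Scores against the current and all earlier positions only.
        scores = [
            sum(qi * ki for qi, ki in zip(q, keys[s])) / math.sqrt(d)
            for s in range(t + 1)
        ]
        weights = softmax(scores)
        # Weighted average of the visible value vectors.
        outputs.append([
            sum(w * values[s][j] for s, w in enumerate(weights))
            for j in range(len(values[0]))
        ])
    return outputs


# Toy inputs (hypothetical): three positions, two dimensions each.
queries = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[1.0, 0.0], [3.0, 2.0], [5.0, 4.0]]
out = causal_attention(queries, keys, values)
```

With all-zero queries every visible position gets equal weight, so each output row is simply the running mean of the value vectors seen so far, which makes the causal mask easy to check by hand.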

Neural Networks: Makemore

Built a series of neural network language models, progressing from a basic bigram model and an MLP-based character-level predictor to deeper architectures with batch normalization, manual backpropagation, and a WaveNet-inspired convolutional network.
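
As a rough idea of the starting point in that progression, the sketch below builds a count-based character bigram model in plain Python. The helper names (`train_bigram`, `next_char_probs`, `sample_word`) and the three-word dataset are hypothetical; the neural versions replace the count table with learned parameters.

```python
import random
from collections import defaultdict


def train_bigram(words):
    """Count character-to-character transitions; '.' marks word start and end."""
    counts = defaultdict(lambda: defaultdict(int))
    for word in words:
        chars = ["."] + list(word) + ["."]
        for prev, nxt in zip(chars, chars[1:]):
            counts[prev][nxt] += 1
    return counts


def next_char_probs(counts, prev):
    """Normalize the counts that follow `prev` into a probability distribution."""
    total = sum(counts[prev].values())
    return {ch: n / total for ch, n in counts[prev].items()}


def sample_word(counts, rng, max_len=20):
    """Sample one character at a time until the end marker is drawn."""
    out, prev = [], "."
    for _ in range(max_len):
        probs = next_char_probs(counts, prev)
        chars = list(probs)
        prev = rng.choices(chars, weights=[probs[c] for c in chars])[0]
        if prev == ".":
            break
        out.append(prev)
    return "".join(out)


# Toy dataset (hypothetical); a real run would use thousands of names.
words = ["emma", "ava", "mia"]
counts = train_bigram(words)
probs_after_m = next_char_probs(counts, "m")
generated = sample_word(counts, random.Random(0))
```

In this toy data the letter "m" is followed once each by "m", "a", and "i", so `probs_after_m` assigns each of them a probability of one third.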

Neural Networks: Micrograd

Built a neural network library from the ground up in Python, implementing backpropagation, gradient descent, and autograd features to understand neural network fundamentals.
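
The core idea of such a library can be sketched in a few dozen lines. The example below is an illustrative reconstruction, not the project's actual code: the `Value` class, its three operations, and the toy fitting problem are assumptions chosen to show reverse-mode autograd followed by a plain gradient descent loop.

```python
import math


class Value:
    """A scalar that remembers how it was computed so gradients can flow back."""

    def __init__(self, data, parents=()):
        self.data = float(data)
        self.grad = 0.0
        self._parents = parents
        self._backward = lambda: None  # overwritten by the op that creates this node

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))

        def _backward():
            self.grad += out.grad
            other.grad += out.grad

        out._backward = _backward
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))

        def _backward():
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward = _backward
        return out

    def tanh(self):
        t = math.tanh(self.data)
        out = Value(t, (self,))

        def _backward():
            self.grad += (1.0 - t * t) * out.grad

        out._backward = _backward
        return out

    def backward(self):
        """Apply the chain rule over the graph in reverse topological order."""
        order, seen = [], set()

        def visit(node):
            if node not in seen:
                seen.add(node)
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            node._backward()


# One neuron: y = tanh(x * w + b), then backpropagate from y.
x, w, b = Value(2.0), Value(-3.0), Value(1.0)
y = (x * w + b).tanh()
y.backward()

# Gradient descent on a toy problem: find weight so that weight * 3 == 6.
weight = Value(0.0)
for _ in range(50):
    err = weight * 3.0 + (-6.0)
    loss = err * err
    weight.grad = 0.0          # clear the gradient from the previous step
    loss.backward()
    weight.data -= 0.05 * weight.grad
```

After `y.backward()`, `x.grad` and `w.grad` hold the derivatives of `y` with respect to each input, and the loop drives `weight.data` toward 2, the value that makes the squared error zero.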

Education

MVJ College of Engineering (Affiliated with VTU), 2023 Graduate

BTech - Computer Science

Grade: 9.32 CGPA

Achievements: Top 10 University Rank Holder in CSE Department, Director of Design at TEDxMVJCE, Vice President at Saahitya Literature Club

Primus PU College, 2019 Graduate

PUC - Physics, Chemistry, Math and Computer Science

Grade: 86.5%, Distinction

St. Peter's School, 2017 Graduate

I.C.S.E - Science

Grade: 92.4%

Achievements: Top Rank Holder in Computer Applications.